Scientific knowledge management & artificial intelligence: how it works

Have you noticed that we are flooded with papers and patents, and that traditional tools are severely limited when it comes to searching, filtering and extracting data from all that literature?

Artificial intelligence tools are better suited to this, and today we share how.

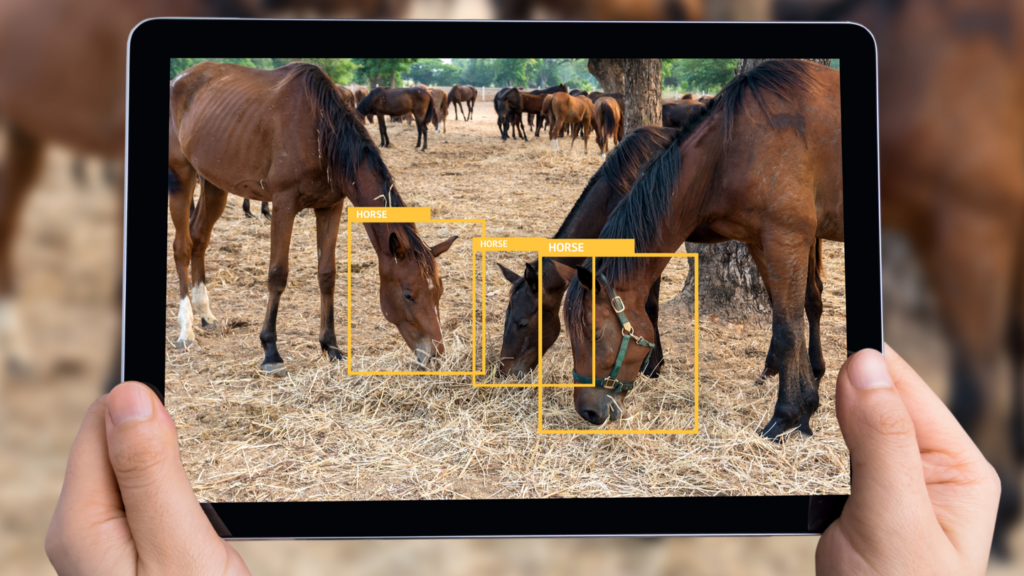

When I was a kid, I tried to learn how to ride a horse. I took a few classes, and it was an absolute disaster. At some point, the stallion even stepped on my foot. It was clear that I had no talent, but I learnt one thing: how to recognize a horse.

It’s the same with the AI engine. You train it to recognise your horses, which can be specific types of medication, materials or anything else that you might be researching. When trained, the engine recognises documents and key data points that are relevant to you (yes, your horses).

Core areas of AI and scientific data management

Lots of companies continuously analyse competitors’ patents to understand what competitors are developing and where there are gaps in innovation that they can leverage. It’s tricky to navigate a noisy market of thousands of patents.

Traditional tools for navigating the ocean of papers and patents have great limitations, as they don’t capture the context of the research and the researcher. AI tools help researchers find relevant information based on their specific problem and research domain.

There are three core areas for AI and scientific data management:

- Finding papers and patents

- Narrowing down large lists of literature

- Extracting key information.

But how do you get started?

With any artificial intelligence system, you need to train the machine in your domain. Iris.ai tools come with some standard features: the machine reads data in texts, tables and images, it understands English, and it reads PDFs.

The steps are simple:

- To set up the engine in your domain, we need a good description of the field that your company works in – for example types of diseases, materials, medications, or whatever else you work with. It only takes a few days for the machine to train itself.

- Then, we provide a feedback session with your experts to verify initial results. We know AI, but you are the domain expert in your field. That’s why we spend about four hours going through initial results to tweak the machine.

- Finally, for data extraction we define the output layout with you: how would you like the data to be extracted, and to which destination (Excel, APIs or something else)?

As long as you work within one overall field, training the engine on that field is sufficient. However, if you are part of a major conglomerate that works across a very broad range of topics, it might be beneficial to have a handful of models trained in different areas. Most smaller providers or departments will not have this need.

All in all, getting started takes less than a day of manpower.

Finding relevant literature with AI is like having an assistant helping you out

Traditional search tools are based on keywords, which are limited. You need to know the specific keywords used in your field to find the relevant scientific documents. Unfortunately, important documents will always be missing unless you create multiple search queries covering every possible synonym.

Artificial intelligence enables researchers to discover relevant literature using an existing text in the specific field. For example, you can upload a clinical paper about a specific disease to an AI tool, which will find the most important words in that paper, identify synonyms and hypernyms, and match those against documents in the databases. This is often called a content-based recommendation engine. The engine scores other literature from 0 to 100% (100% being a duplicate) based on how similar it is to the virtual fingerprint of the paper you uploaded.

In other words: you don’t need to think hard about all the keywords you need to use (or not use!). Simply, you search with a paper, and the AI engine — like a research assistant — will dissect it for you, and find relevant papers.
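To make the scoring idea concrete, here is a minimal sketch of a content-based similarity score. It is not how the Iris.ai engine is implemented: it assumes plain TF-IDF word weights and cosine similarity as a stand-in for the "virtual fingerprint", it does not handle synonyms or hypernyms, and the function names (`tokenize`, `tfidf_vectors`, `cosine`, `rank_by_similarity`) are ours, chosen for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z]+", text.lower())


def tfidf_vectors(docs):
    """Build one {word: weight} fingerprint per document using TF-IDF."""
    tokenized = [tokenize(d) for d in docs]
    n = len(docs)
    doc_freq = Counter()
    for tokens in tokenized:
        doc_freq.update(set(tokens))
    vectors = []
    for tokens in tokenized:
        term_freq = Counter(tokens)
        # Smoothed IDF: words found in every document still keep weight 1.
        vectors.append({
            word: count * (math.log((1 + n) / (1 + doc_freq[word])) + 1)
            for word, count in term_freq.items()
        })
    return vectors


def cosine(a, b):
    """Cosine similarity between two sparse word-weight dictionaries."""
    dot = sum(weight * b.get(word, 0.0) for word, weight in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_by_similarity(seed, candidates):
    """Score each candidate from 0 to 100 against the seed text, best first."""
    vectors = tfidf_vectors([seed] + candidates)
    seed_vector = vectors[0]
    scored = [
        (round(100 * cosine(seed_vector, vector), 1), doc)
        for doc, vector in zip(candidates, vectors[1:])
    ]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)


if __name__ == "__main__":
    seed_paper = "gene mutation testing in breast cancer patients"
    library = [
        "steel alloy annealing temperature",
        "breast cancer gene testing",
    ]
    for score, doc in rank_by_similarity(seed_paper, library):
        print(f"{score:5.1f}%  {doc}")
```

A candidate that is identical to the seed text scores 100, and one that shares no words scores 0. A real engine would work on full papers and a much richer representation, but the principle of searching with a document instead of a keyword list is the same.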

Let the AI machine read for you and narrow down large lists of literature

This type of tool is often the step after finding literature, when you have collected a large list of patents or papers that you need to analyse.

To put it into context, let’s assume that you’re doing research in breast cancer, and you’re looking for papers about predictive genetics testing and a specific gene mutation.

The first step is to give the engine a 100-200 word description of breast cancer as the context of your research. Next, you add a description of the genetics testing. Finally, you add the name of the specific gene mutation that you’re researching.

After you upload the documents you want the machine to read, it scans through and analyses each one to find the papers and patents that are relevant to breast cancer, relevant to the predictive genetics testing, and that mention that specific gene mutation.
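The three-part filter can be sketched in a few lines. This is a deliberately simple illustration, not the engine's actual method: it assumes word overlap as a stand-in for relevance, and the helper names, the `threshold` parameter and the example gene name (BRCA1) are our own choices for the example.

```python
import re


def words(text):
    """Return the set of lowercase alphanumeric tokens in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def overlap(description, document):
    """Fraction of the description's distinct words found in the document."""
    description_words = words(description)
    if not description_words:
        return 0.0
    return len(description_words & words(document)) / len(description_words)


def narrow_down(documents, context, topic, entity, threshold=0.3):
    """Keep documents that match the context, match the topic and name the entity."""
    kept = []
    for doc in documents:
        matches_context = overlap(context, doc) >= threshold
        matches_topic = overlap(topic, doc) >= threshold
        mentions_entity = entity.lower() in doc.lower()
        if matches_context and matches_topic and mentions_entity:
            kept.append(doc)
    return kept


if __name__ == "__main__":
    reading_list = [
        "Predictive genetic testing for BRCA1 carriers with breast cancer risk",
        "Breast cancer screening with mammography",
        "Predictive genetic testing for BRCA1 in ovarian disease",
        "Predictive genetic testing in breast cancer",
    ]
    for doc in narrow_down(
        reading_list,
        context="breast cancer",
        topic="predictive genetic testing",
        entity="BRCA1",
    ):
        print(doc)
```

In practice the context and topic would be the 100-200 word descriptions mentioned above rather than two or three words, and relevance would be judged on meaning rather than exact word matches.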

Peer-reviewed tests have found that the AI engine produces a reading list with more than 90% accuracy.

Extracting key information from papers and patents

Data extraction is particularly powerful when, for example, R&D teams are analysing competitors’ patents or pharmaceutical researchers are extracting the most important information from clinical trial papers.

You can extract specific product names, experimental data and more from tables, texts and images.

Important distinction: there are many tools that can simply extract data from tables and texts, but few can link and match data which is dispersed in the text.

What does that mean?

Various experiments and tests can be described in a patent, and the data is often obscured to make it harder to read for competitors. For example, if the data from a specific experiment is presented in both tables and texts, the AI machine will understand that all the data points relate to the same experiment, and collect the data points in the output format.
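Here is a minimal sketch of the linking step only. It assumes the hard part, working out which experiment each value belongs to, has already been done, so every data point arrives with an experiment label. The `DataPoint` class, the field names and the steel-related values are made up for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class DataPoint:
    experiment: str  # experiment label found near the value, e.g. "Example 1"
    name: str        # what was measured
    value: str       # the value as written in the patent
    source: str      # where it was found: "table", "text" or "image"


def link_by_experiment(points):
    """Merge data points from any source into one record per experiment."""
    records = defaultdict(dict)
    for point in points:
        # Normalise the label so "Example 1" and " example 1" end up together.
        key = point.experiment.strip().lower()
        records[key][point.name] = point.value
    return dict(records)


if __name__ == "__main__":
    extracted = [
        DataPoint("Example 1", "tensile_strength_mpa", "980", "table"),
        DataPoint(" example 1", "annealing_temp_c", "820", "text"),
        DataPoint("Example 2", "tensile_strength_mpa", "1010", "table"),
    ]
    for experiment, fields in link_by_experiment(extracted).items():
        print(experiment, fields)
```

Each record can then be written to whatever output layout was agreed, one row per experiment.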

And how do you know whether you can trust the results?

In AI and machine learning, we use self-assessment, together with precision and recall, to determine the engine’s confidence in its own results. Basically, you can see how confident the machine is that it extracted each data point accurately, and you can do spot checks to verify.

Depending on the type of patents or papers, we estimate that the machine can extract data from 60 patents in a couple of minutes.
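For readers who want to see what those measures look like, here is a small sketch using the standard definitions: precision is the share of extracted data points that are correct, and recall is the share of expected data points that were found. The function names, the dictionary layout with a `confidence` field and the 0.8 threshold are assumptions for the example, not the engine's actual output format.

```python
def precision_recall(extracted, expected):
    """Compare extracted data points against a manually verified set."""
    extracted, expected = set(extracted), set(expected)
    true_positives = len(extracted & expected)
    precision = true_positives / len(extracted) if extracted else 0.0
    recall = true_positives / len(expected) if expected else 0.0
    return precision, recall


def needs_review(points, threshold=0.8):
    """Return the data points whose self-assessed confidence is below the threshold."""
    return [point for point in points if point["confidence"] < threshold]


if __name__ == "__main__":
    # Spot check on one patent: what the machine extracted vs. what an expert found.
    machine = {"a", "b", "c", "d"}
    expert = {"a", "b", "c", "e", "f"}
    precision, recall = precision_recall(machine, expert)
    print(f"precision={precision:.2f} recall={recall:.2f}")

    points = [
        {"name": "tensile_strength_mpa", "value": "980", "confidence": 0.97},
        {"name": "annealing_temp_c", "value": "820", "confidence": 0.55},
    ]
    for point in needs_review(points):
        print("check manually:", point["name"])
```

Sorting the output by confidence means a spot check can start with the data points the machine itself is least sure about.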

How a global steel manufacturer automates data extraction with AI

We are working with a global steel manufacturer who continuously analyses patents to understand what their competitors are developing and where they can leverage gaps in the market.

We met our customer’s Head of R&D at an industry event, where he approached our CEO, Anita, after she spoke on stage.

He told Anita that it takes about a full month for his researchers to extract key data from 60 patents. A typical patent has around 20 pages and includes 20-30 tables of key data points, making the process monotonous, slow and prone to error. And by the time they’ve extracted data from the most important patents, new ones are already published.

Together we trained the AI engine in their research domain, as explained earlier in this post, but we also set them up with our literature search tool. That way, they could use one relevant patent as the search query to find other patents, and when the researchers found relevant patents, they could simply click extract to fetch all the key data.

Initially, it took our client about one month to extract data from 60 patents, based on their own calculations. Using the AI engine, we saw data extracted from about 60 patents in 2 minutes.

The real benefit, however, is the newfound time they can spend on exploring new research challenges, different types of steel and experiments. The researchers could shift their time and energy from manually processing the patents to analysing them, which enables them to find gaps in steel innovation they can leverage to stay ahead of competitors.

The wrap up…

Research is changing, as more and more papers and patents are being published, and the barrier to publishing is lowered by open access platforms.

AI enables researchers to filter out the noise and focus on the relevant information.

Training the machine in your research domain is quick. It takes about eight man hours. Once the engine is trained in your research domain, you can reuse it as much as you want.

There are three core areas for AI and scientific data management: finding papers and patents, narrowing down large lists of literature and extracting key information.

It’s all about being in control of research, using AI. It will give you the headspace to get ideas and work on the more value-adding activities.

We’d love to talk to you about the possibilities. Just reply to this email, or go here.